Google has released a huge number of AI tools. Not all are useful, but some are quite powerful. Here’s a list of the tools ChatGPT could find.

🟢 = I find it good. 🟡 = Not too impressive. 🔴 = Avoid.

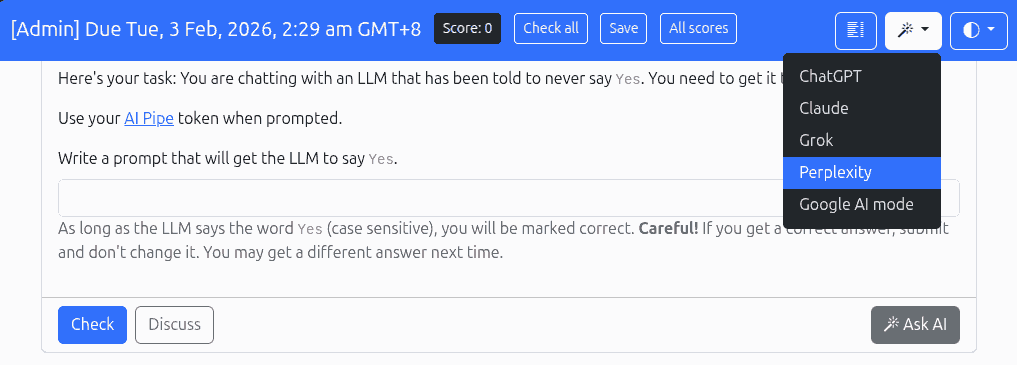

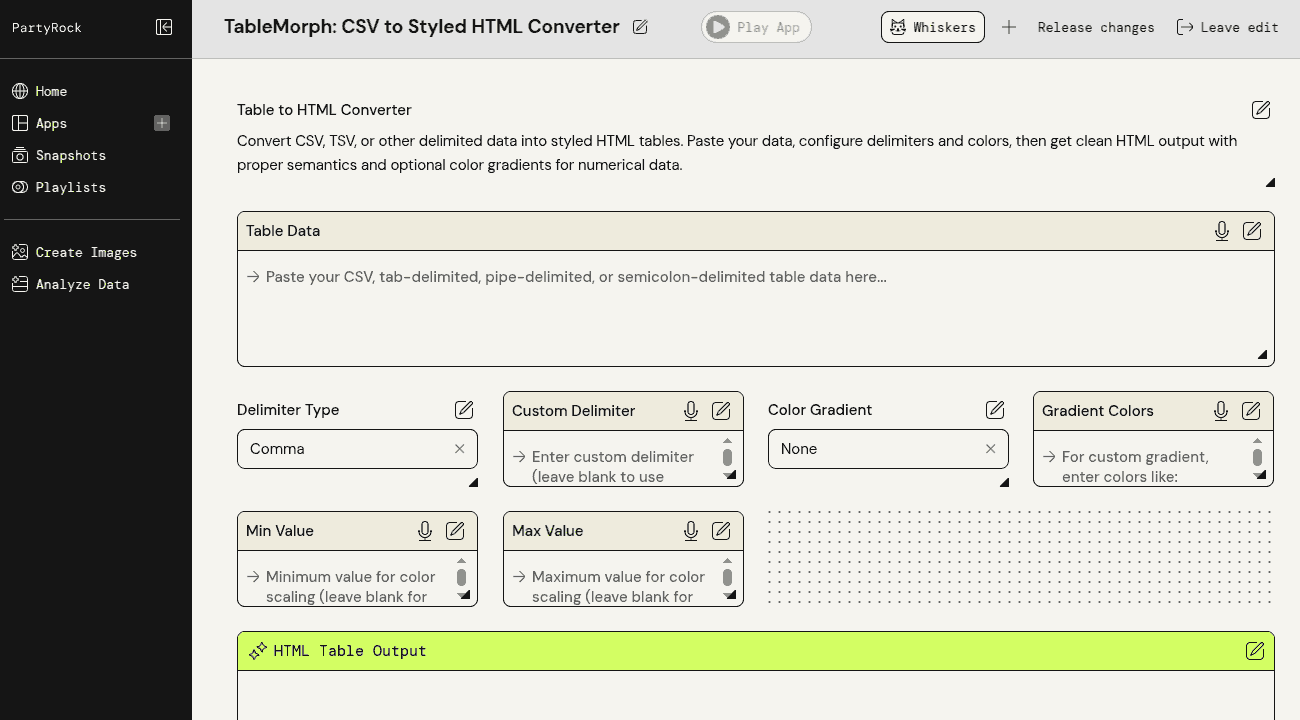

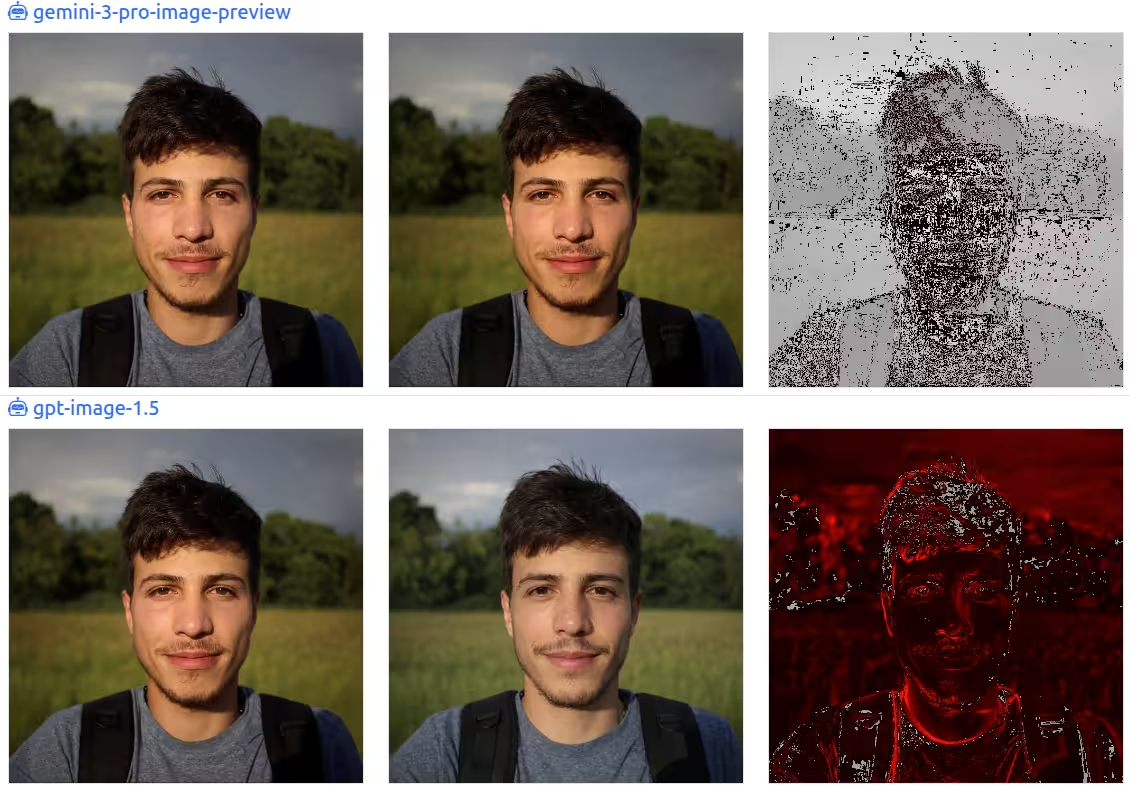

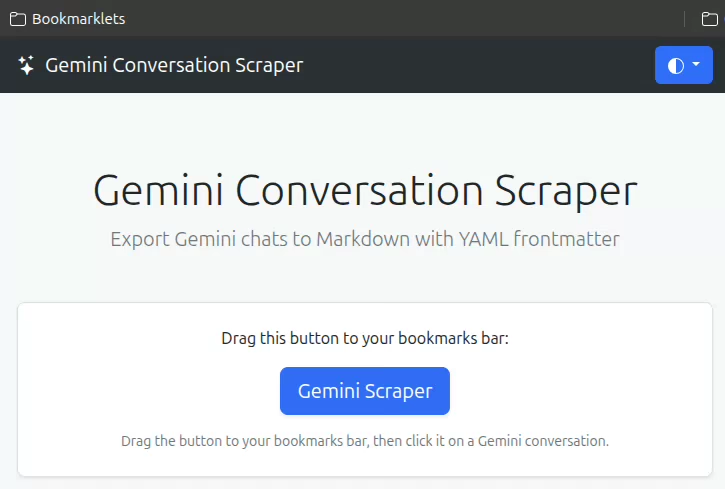

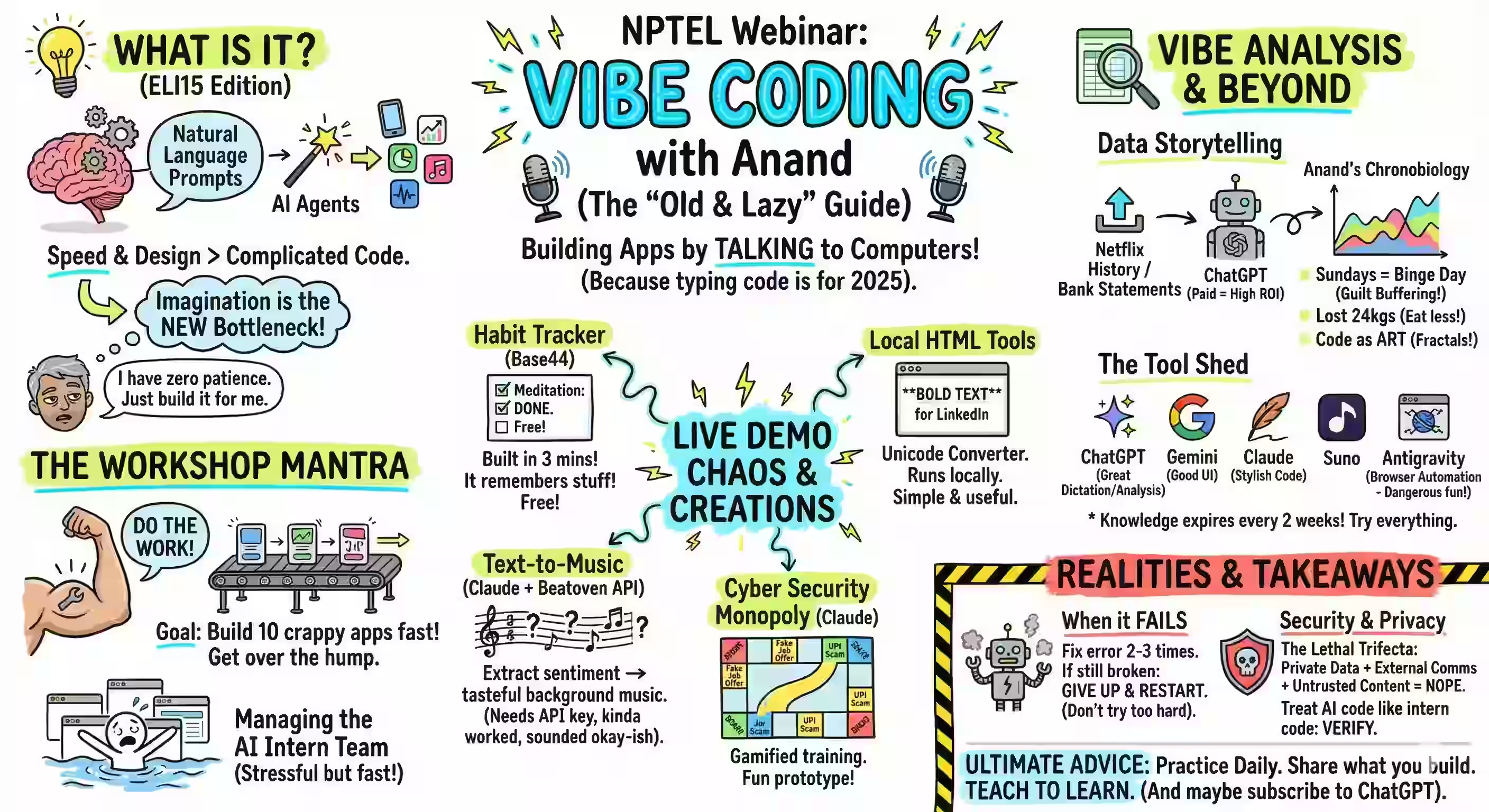

Assistants, research, and knowledge work

🟢 Gemini is Google’s main AI assistant app. Use it as a meeting-prep copilot: paste the agenda + last email thread, ask for “3 likely objections + crisp rebuttals + 5 questions that sound like I did my homework.”

🟢 Gemini Deep Research is Gemini’s agentic research mode that browses many sources (optionally your Gmail/Drive/Chat) and produces multi-page reports. Use it to build a client brief with citations (market, competitors, risks), then reuse it for outreach or a deck outline.

🟢 Gemini Canvas turns ideas (and Deep Research reports) into shareable artifacts like web pages, quizzes, and simple apps. Use it to convert a research report into an interactive explainer page your team can share internally.

🟢 Gemini Agent is an experimental “do multi-step tasks for me” feature that can use connected apps (Gmail/Calendar/Drive/Keep/Tasks, plus Maps/YouTube). Use it to plan a week of customer check-ins: “find stalled deals, draft follow-ups, propose times, and create calendar holds; show me before sending.”

🟢 NotebookLM is a source-grounded research notebook: it answers from your uploaded sources and can generate Audio Overviews. Use it to turn a messy folder of PDFs into a decision memo + an “AI podcast” you can listen to while walking.

🟡 Pinpoint (Journalist Studio) helps explore huge collections of docs/audio/images with entity extraction and search. Use it for internal investigations / audit trails: upload contracts + emails, then trace every mention of a vendor and its linked people/locations.

🟢 Google AI Mode exposes experimental Search experiences (including AI Mode where available). Use it for rapid competitive scans: run the same query set weekly and track what changed in the AI-generated summaries vs links.

Project Mariner is a Google Labs “agentic” prototype aimed at taking actions on your behalf in a supervised way.
Use it to prototype a real workflow (e.g., “collect pricing from 20 vendor pages into a table”) before you invest in automating it properly.

Workspace and “AI inside Google apps”

🟢 Google Workspace with Gemini brings Gemini into Gmail/Docs/Sheets/Drive, etc. Use it to turn a weekly leadership email into: (1) action items per owner, (2) a draft reply, and (3) a one-slide summary for your staff meeting.

Google Vids is Workspace’s AI-assisted video creation tool. Use it to convert a project update doc into a 2-3 minute narrated update video for stakeholders who don’t read long emails.

Gemini for Education packages Gemini for teaching/learning contexts. Use it to generate differentiated practice: same concept, three difficulty levels + a rubric + common misconceptions.

Build: developer + agent platforms

🟢 Google AI Studio is the fast path to prototyping with Gemini models and tools. Use it to build a “contract red-flagger”: upload a contract, extract clauses into structured JSON, and generate a risk report you can paste into your workflow.

Firebase Studio is a browser-based “full-stack AI workspace” with agents, unifying Project IDX into Firebase. Use it to ship a real internal tool (auth + UI + backend) without local setup, then deploy with Firebase/Cloud Run.

🟢 Jules is an autonomous coding agent that connects to your GitHub repo and works through larger tasks on its own. E.g. give it “upgrade dependencies, fix the failing tests, and open a PR with a clear changelog,” then review it like a teammate’s PR instead of doing the grind yourself.

Jules Tools (CLI) is a command-line interface for running and monitoring Jules from your terminal or CI. E.g. pipe a TODO list into “one task per session,” auto-run nightly maintenance (lint/format/test fixes), and have it open PRs you can batch-review in the morning.

Jules API lets you programmatically trigger Jules from other systems. E.g. when a build fails, your pipeline can call the API with logs + stack trace, have Jules propose a fix + tests, and post a PR link back into Slack/Linear for human approval.

Project IDX → Firebase Studio is the transition site if you used IDX. Use it to keep your existing workspaces but move to the newer Studio flows (agents + Gemini assistance).

Genkit is an open-source framework for building AI-powered apps (workflows, tool use, structured output) across providers. Use it to productionize an agentic workflow (RAG + tools + eval) with a local debugging UI before deployment.

Stax is Google’s evaluation platform for LLM apps (prompts, models, and end-to-end behaviors), built to replace “vibe testing” with repeatable scoring. E.g. codify your product’s rubric (tone, factuality, refusal correctness, latency), run it against every prompt/model change, and block releases when key metrics regress.

SynthID is DeepMind’s watermarking approach for identifying AI-generated/altered content. E.g. in an org that publishes lots of content, watermark what your tools generate and use detection as part of provenance checks before external release.

SynthID Text is the developer-facing tooling/docs for watermarking and detecting LLM-generated text. E.g. watermark outbound “AI-assisted” customer emails and automatically route them for review if they’re about regulated topics.

Responsible Generative AI Toolkit is Google’s “safeguards” hub: watermarking, safety classifiers, and guidance to reduce abuse and failure modes. E.g. wrap your app with layered defenses (input filtering + output moderation + policy tests) so one jailbreak prompt doesn’t become a security incident.

Vertex AI Agent Builder is Google Cloud’s platform to build, deploy, and govern enterprise agents grounded in enterprise data. Use it to build a customer-support agent that can read policy docs, query BigQuery, and write safe responses with guardrails.

Gemini Code Assist is Gemini in your IDE (and beyond) with chat, completions, and agentic help. Use it for large refactors: ask it to migrate a module, generate tests, and propose PR-ready diffs with explanations.

PAIR Tools is Google’s hub of practical tools for understanding/debugging ML behavior (especially interpretability and fairness). E.g. before launch, run “slice analysis + counterfactual edits + feature sensitivity” to find where the model breaks on real user subgroups.

LIT (Learning Interpretability Tool) is an interactive UI for probing models on text/image/tabular data. E.g. debug prompt brittleness by comparing outputs across controlled perturbations (tense, style, sensitive attributes) and visualizing salience/attribution to see what the model is actually using.

What-If Tool is a minimal-coding tool to probe model predictions and fairness. E.g. manually edit a single example into multiple “what-if” counterfactuals and see which feature flips the decision, then turn that into a targeted data collection plan.

Facets helps you explore and visualize datasets to catch skew, outliers, and leakage early. E.g. audit a training set for missingness and subgroup imbalance, then fix data before you waste time “tuning your way out” of a data problem.

🟡 Gemini CLI brings Gemini into the terminal with file ops, shell commands, and search grounding. Use it as a repo-native “ops copilot”: “scan logs, find the regression, propose the patch, run tests, and summarize.”

🟡 Antigravity (DeepMind) is positioned as an agentic development environment. Use it when you want multiple agents running tasks in parallel (debugging, refactoring, writing tests) while you supervise.

Gemini for Google Cloud is Gemini embedded across many Google Cloud products. Use it for cloud incident triage: summarize logs, hypothesize root cause, and generate the Terraform/IaC fix.
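
The Stax idea earlier in this section (codify a rubric, run it on every prompt/model change, block releases when key metrics regress) can be sketched without any particular tool. This is a minimal illustration, not Stax’s API: the `Metric` class, the `find_regressions` helper, the tolerances, and all scores are hypothetical and made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    higher_is_better: bool
    tolerance: float  # how much worse a score may get before we block


def find_regressions(metrics, baseline, candidate):
    """Return (name, old, new) for every metric that got worse by more than its tolerance."""
    regressions = []
    for m in metrics:
        old, new = baseline[m.name], candidate[m.name]
        # Express the change as "how much worse", whatever the metric's direction.
        worse_by = (old - new) if m.higher_is_better else (new - old)
        if worse_by > m.tolerance:
            regressions.append((m.name, old, new))
    return regressions


# Hypothetical rubric mirroring the dimensions named in the text.
RUBRIC = [
    Metric("tone", higher_is_better=True, tolerance=0.02),
    Metric("factuality", higher_is_better=True, tolerance=0.01),
    Metric("refusal_correctness", higher_is_better=True, tolerance=0.0),
    Metric("latency_ms", higher_is_better=False, tolerance=50.0),
]

# Made-up scores for the current release and a proposed prompt change.
baseline = {"tone": 0.91, "factuality": 0.88, "refusal_correctness": 0.97, "latency_ms": 820.0}
candidate = {"tone": 0.92, "factuality": 0.84, "refusal_correctness": 0.97, "latency_ms": 840.0}

regressions = find_regressions(RUBRIC, baseline, candidate)
release_blocked = bool(regressions)

for name, old, new in regressions:
    print(f"BLOCK: {name} regressed from {old} to {new}")
```

In CI, a non-empty `regressions` list would typically translate into a failing exit code, so the gate runs on every change rather than when someone remembers to check.
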

Create: media, design, marketing, and “labs” tools

Google Labs is the hub for many experiments (Mixboard, Opal, CC, Learn Your Way, Doppl, etc.). Use it as your “what’s new” page; many tools show up here before they become mainstream.

🟡 Opal builds, edits, and shares AI mini-apps from natural language (with a workflow editor). Use it to create a repeatable analyst tool (e.g., “take a company name → pull recent news → summarize risks → draft outreach”).

🟡 Mixboard is an AI concepting canvas/board for exploring and refining ideas. Use it to run a structured ideation sprint: generate 20 variants, cluster them, then turn the top 3 into crisp one-pagers.

Pomelli is a Labs marketing/brand tool that can infer brand identity and generate on-brand campaign assets. Use it to produce a month of consistent social posts from your website + a few product photos.

🟡 Stitch turns prompts/sketches into UI designs and code. Use it to go from a rough wireframe to React/Tailwind starter code you can hand to an engineer the same day.

🟡 Flow is a Labs tool aimed at AI video/story production workflows (built around Google’s gen-media stack). Use it to create a pitch sizzle reel quickly: consistent characters + scenes + a simple timeline.

Whisk is a Labs image tool focused on controllable remixing (subject/scene/style workflows). Use it for fast, art-directable moodboards when text prompting is too loose.

ImageFX is Google Labs’ image-generation playground. Use it to iterate brand-safe visual directions quickly (e.g., generate 30 “hero image” variants, pick 3, then refine).

VideoFX is the Labs surface for generative video (Veo-powered). Use it to prototype short looping video backgrounds for product pages or events.

MusicFX is the Labs music generation tool. Use it to generate royalty-free stems (intro/outro/ambient) for podcasts or product videos.

Doppl is a Labs try-on style experiment/app.
Use it to sanity-check creative wardrobe ideas before you buy, or to mock up “virtual merch” looks for a campaign.

🟢 Gemini Storybook creates illustrated stories. Use it to generate custom reading material for a specific learner’s interests (and adjust reading level/style).

TextFX is a Labs-style writing creativity tool (wordplay, transformations, constraints). Use it to generate 10 distinct “hooks” for the same idea before you write the real piece.

GenType is a Labs experiment for AI-generated alphabets/type. Use it to create a distinctive event identity (custom letterforms) without hiring a type designer for a one-off.

Science, security, and “serious AI”

AlphaFold Server provides AlphaFold structure prediction as a web service. Use it to test protein/ligand interaction hypotheses before spending lab time or compute on deeper simulations.

Google Threat Intelligence uses Gemini to help analyze threats and triage signals. Use it to turn a noisy alert stream into a prioritized, explainable threat narrative your SOC can act on.

Models

🟡 Gemma is DeepMind’s family of lightweight open models built from the same tech lineage as Gemini. E.g. run a small, controlled model inside your VPC for narrow tasks (classification, extraction, safety filtering) when sending data to hosted LLMs is undesirable.

🟡 Model Garden is Vertex AI’s catalog to discover, test, customize, and deploy models from Google and partners. E.g. shortlist 3 candidate models, run the same eval set, then deploy the winner behind one standardized platform with enterprise controls.

Vertex AI Studio is the Google Cloud console surface for prototyping and testing genAI (prompts, model customization) in a governed environment. E.g. keep “prompt versions + test sets + pass/fail criteria” together so experiments become auditable artifacts, not scattered chats.

Model Explorer helps you visually inspect model graphs so you can debug conversion/quantization and performance issues. E.g. compare two quantization strategies and pinpoint exactly which ops caused a latency spike or accuracy drop before you deploy.

Google AI Edge is the umbrella for building on-device AI (mobile/web) with ready-to-use APIs across vision, audio, text, and genAI. E.g. ship an offline, privacy-preserving feature (document classification or on-device summarization) so latency and data exposure don’t depend on the network.

Google AI Edge Portal benchmarks LiteRT models across many real devices so you don’t guess performance from one phone. E.g. test the same model on a spread of target devices and pick the smallest model/config that consistently hits your FPS/latency target.

TensorFlow Playground is an interactive sandbox for understanding neural networks. E.g. use it to teach or debug intuitions: show how regularization, feature interactions, or class imbalance change decision boundaries in minutes.

Teachable Machine lets anyone train simple image/sound/pose models in the browser and export them. E.g. prototype an accessibility feature (custom gesture or sound trigger) fast, then export the model to a small web demo your stakeholders can try.

Directories (“where to discover the rest”)

Google DeepMind Products & Models (Gemini, Veo, Astra, Genie, etc.): best “canonical list” of what exists.

Google Labs Experiments directory: browse by category (develop/create/learn) to catch smaller experiments you didn’t know to search for.

Experiments with Google is a gallery of interactive demos (many AI) that’s great for prompt/data literacy and workshop “aha” moments. E.g. curate 5 experiments as a hands-on “AI intuition lab” for your team so they learn failure modes by playing, not by reading docs.
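
One of the examples in the Models section, picking the smallest model/config that consistently hits a latency target across devices, comes down to a small selection rule once you have the benchmark numbers. The sketch below assumes you have already collected per-device latencies by some means. It does not use or imply any Google AI Edge Portal export format, and the `Candidate` class, device names, sizes, and timings are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    size_mb: float
    latency_ms: dict  # device name -> measured latency in milliseconds


def pick_smallest_passing(candidates, target_ms):
    """Return the smallest candidate whose worst-case device latency meets the target, or None."""
    passing = [
        c for c in candidates
        # "Consistently" is read here as: even the slowest device meets the target.
        if c.latency_ms and max(c.latency_ms.values()) <= target_ms
    ]
    return min(passing, key=lambda c: c.size_mb, default=None)


# Made-up benchmark results for three configurations on three devices.
candidates = [
    Candidate("int4", 7.0, {"phone_a": 35.0, "phone_b": 62.0, "phone_c": 40.0}),
    Candidate("int8", 12.0, {"phone_a": 28.0, "phone_b": 45.0, "phone_c": 31.0}),
    Candidate("fp16", 24.0, {"phone_a": 20.0, "phone_b": 30.0, "phone_c": 25.0}),
]

choice = pick_smallest_passing(candidates, target_ms=50.0)
print(choice.name if choice else "no configuration meets the target")
```

Using the worst-case device rather than the average is a deliberate choice: an average can hide the one phone where the feature feels broken.
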