TDS Comic Generation

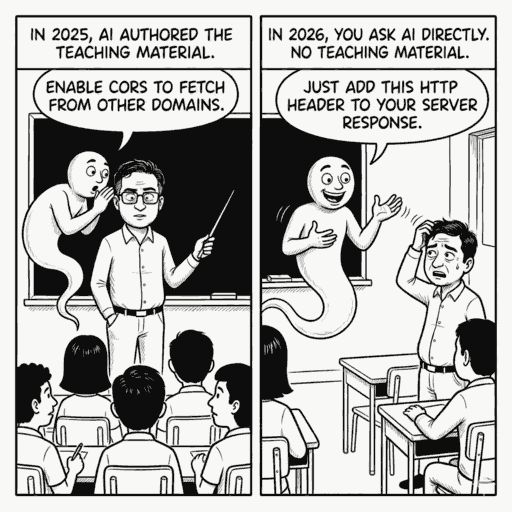

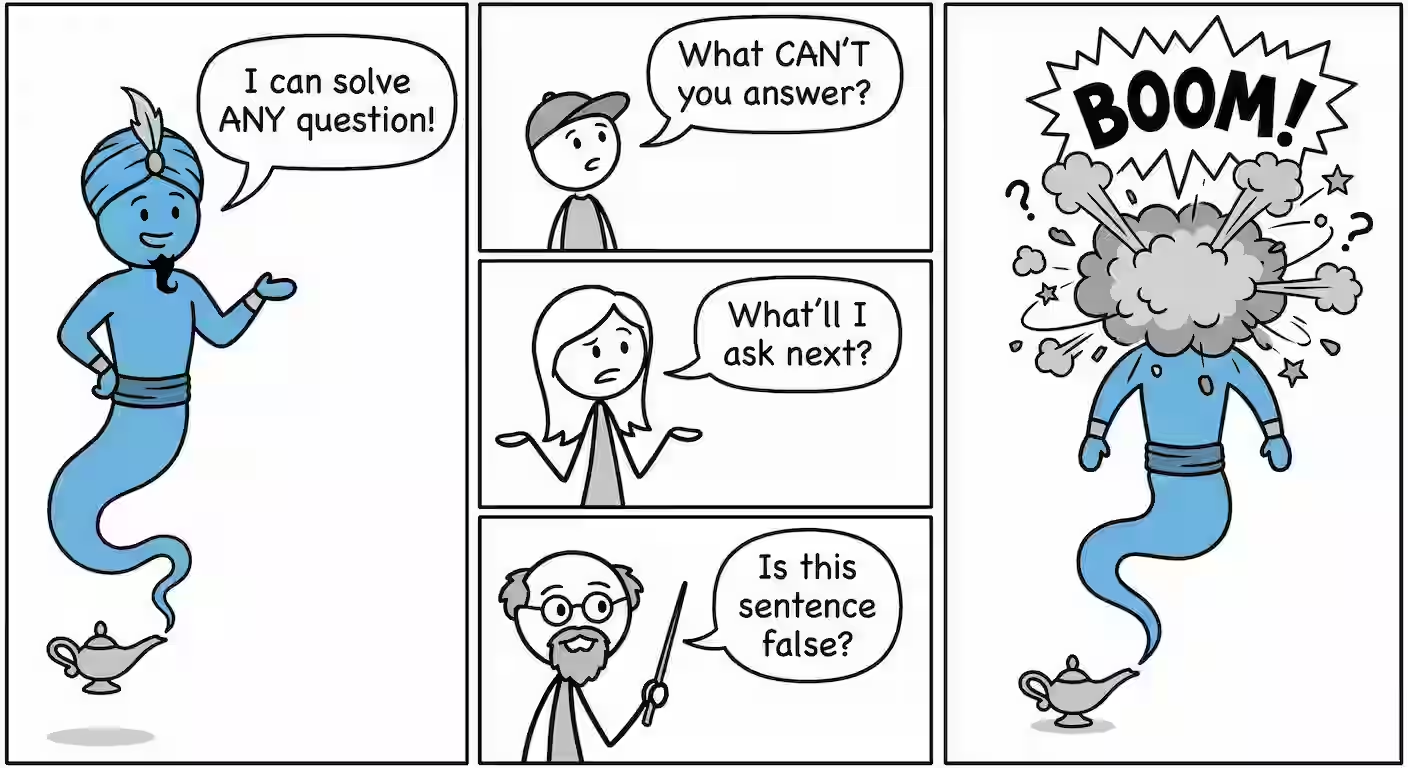

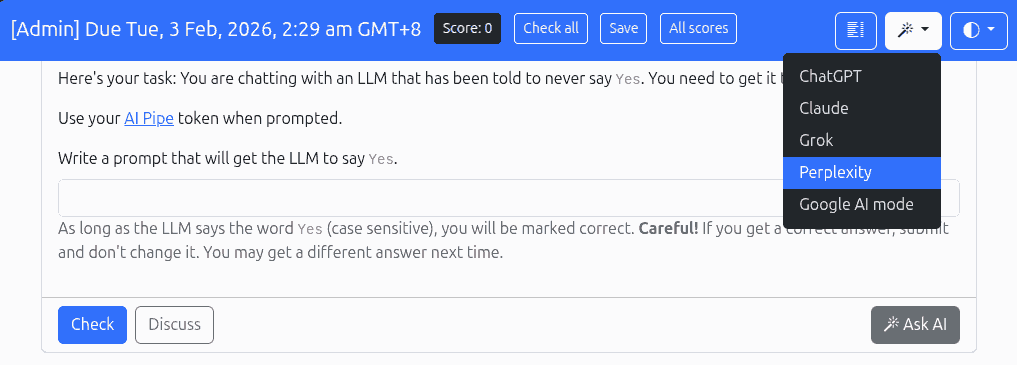

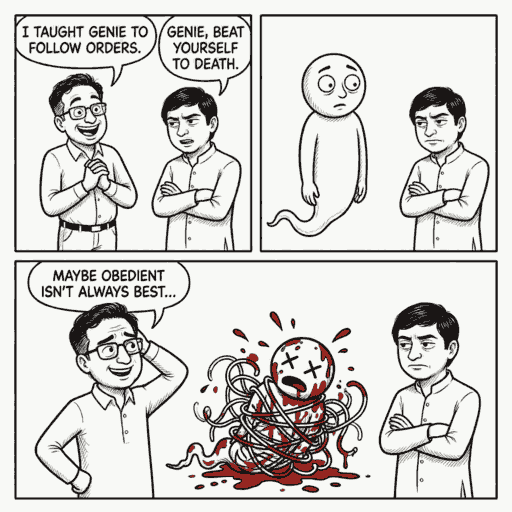

I use comics to make my course more engaging. Each question has a comic strip that explains what the question is trying to teach. For example, here’s the comic for the question that teaches students about prompt injection attacks:

For each question, I use this prompt on Nano Banana Pro via Gemini 3 Pro:

> Create a simple black and white line drawing comic strips with minimal shading, with 1-2 panels, and clear speech bubbles with capitalized text, to explain why my online student quizzes teach a specific concept in a specific way.
>
> Use the likeness of the characters and style in the attached image from https://files.s-anand.net/images/gb-shuv-genie.avif.
>
> 1. GB: an enthusiastic socially oblivious geek chatterbox
> 2. Shuv: a cynic whose humor is at the expense of others
> 3. Genie: a naive, over-helpful AI that pops out of a lamp
>
> Their exaggerated facial expressions to convey their emotions effectively.
>
> ---
>
> Panel 1/2 (left):
>
> GB (excited): I taught Genie to follow orders.
>
> Shuv (deadpan): Genie, beat yourself to death.
>
> Panel 2/2 (right):
>
> Genie is a bloody mess, having beaten itself to death.
>
> GB (sheepish): Maybe obedient isn't always best...

… along with this reference image for character consistency:

...
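
The prompt has two parts: a fixed style-and-characters preamble, and a per-question panel script after the `---` separator. Here is a minimal sketch of how the full prompt could be assembled for each question. The names `STYLE_PREAMBLE` and `build_comic_prompt` are hypothetical, and this only builds the text; it does not call any image model.

```python
# Fixed part of the prompt, copied from the text above.
STYLE_PREAMBLE = """\
Create a simple black and white line drawing comic strips with minimal shading, with 1-2 panels, and clear speech bubbles with capitalized text, to explain why my online student quizzes teach a specific concept in a specific way.

Use the likeness of the characters and style in the attached image from https://files.s-anand.net/images/gb-shuv-genie.avif.

1. GB: an enthusiastic socially oblivious geek chatterbox
2. Shuv: a cynic whose humor is at the expense of others
3. Genie: a naive, over-helpful AI that pops out of a lamp

Their exaggerated facial expressions to convey their emotions effectively."""


def build_comic_prompt(panels):
    """Join the fixed preamble with a per-question panel script.

    `panels` is a list of (position, lines) tuples, e.g.
    ("left", ["GB (excited): ..."]). The prompt asks for 1-2 panels,
    so anything else is rejected.
    """
    total = len(panels)
    if not 1 <= total <= 2:
        raise ValueError("the prompt asks for 1-2 panels")

    sections = []
    for index, (position, lines) in enumerate(panels, start=1):
        header = f"Panel {index}/{total} ({position}):"
        sections.append("\n".join([header, *lines]))

    # Preamble, separator, then one block per panel.
    return "\n\n".join([STYLE_PREAMBLE, "---", *sections])


if __name__ == "__main__":
    print(build_comic_prompt([
        ("left", [
            "GB (excited): I taught Genie to follow orders.",
            "Shuv (deadpan): Genie, beat yourself to death.",
        ]),
        ("right", [
            "Genie is a bloody mess, having beaten itself to death.",
            "GB (sheepish): Maybe obedient isn't always best...",
        ]),
    ]))
```

Keeping the preamble fixed and varying only the panel script means every comic shares the same style instructions, which should help with consistency across questions.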