Gemini can pass the bar exam and solve maths olympiad puzzles. But it’s music-deaf.

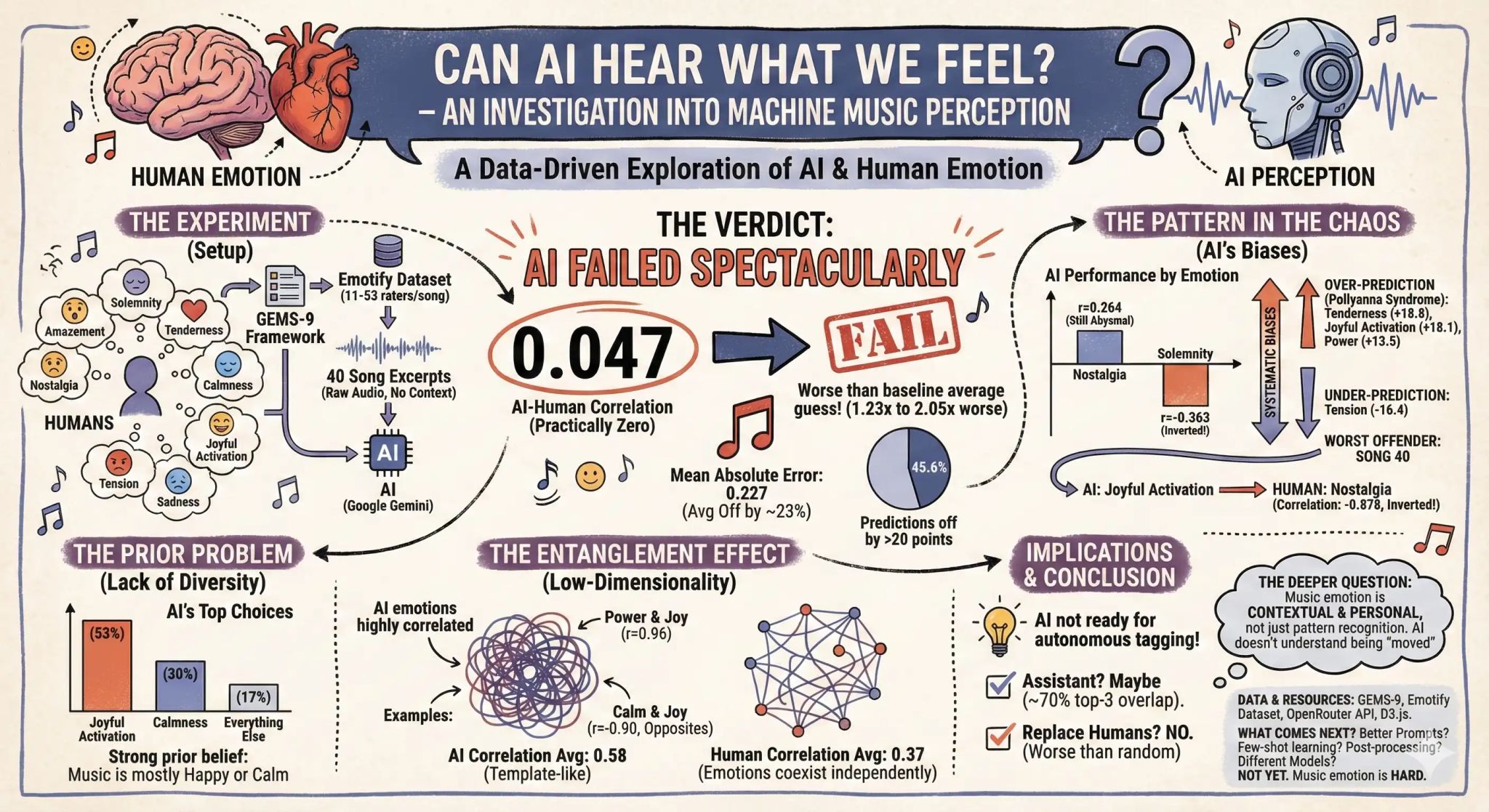

Nitin Kumar asked Gemini to rate 40 songs on joy, sadness, tension, nostalgia, etc. and compared its ratings with human ratings. There was ZERO correlation between the two. It’s like it’s a different species.

In fact, if you just predicted the average emotion for every single song, you’d still do 1.2× to 2× better than Gemini! It wasn’t adding noise to a signal. It was subtracting signal from noise! For one song, the correlation was -88%, i.e. it predicted almost the exact opposite emotions.
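
To make the "predict the average" comparison concrete, here's a minimal sketch. The ratings are made up for illustration (not the study's data), and RMSE is my assumption for the error metric; the original analysis may use something else.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def rmse(pred, actual):
    """Root-mean-square error of predictions against actual ratings."""
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(pred, actual)) / len(actual))

# Hypothetical ratings for one emotion across five songs (made-up numbers).
human = [1.0, 2.0, 3.0, 4.0, 5.0]
model = [5.0, 4.0, 3.0, 2.0, 1.0]  # deliberately the reverse of the human ratings

# Baseline: ignore the audio and predict the average human rating every time.
baseline = [sum(human) / len(human)] * len(human)

r = pearson(human, model)
ratio = rmse(model, human) / rmse(baseline, human)

print(f"correlation: {r:.2f}")
print(f"model error is {ratio:.1f}x the baseline error")
```

A ratio above 1 means the constant guess beats the model, which is the situation described above.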

It’s not just noisy, it’s biased as well. It hears music as happier, more tender, more powerful than we do. It massively over-predicts “joyful activation” (53% of songs!) and dramatically under-estimates tension.
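
Bias is different from noise: it's the average direction of the error. A rough sketch of how you might measure it, using made-up ratings on a hypothetical 1-5 scale (the emotion names and numbers are illustrative, not the study's data):

```python
# Hypothetical per-song ratings for two emotions (made-up numbers).
human = {"joy": [2.0, 3.0, 1.0, 4.0], "tension": [4.0, 3.0, 5.0, 2.0]}
model = {"joy": [4.0, 4.0, 3.0, 5.0], "tension": [2.0, 2.0, 3.0, 1.0]}

def mean_signed_error(pred, actual):
    """Average of (prediction - actual); positive means over-prediction."""
    return sum(p - a for p, a in zip(pred, actual)) / len(actual)

bias = {emotion: mean_signed_error(model[emotion], human[emotion]) for emotion in human}

for emotion, value in bias.items():
    direction = "over" if value > 0 else "under"
    print(f"{emotion}: {direction}-predicted by {abs(value):.1f} points on average")
```

A positive value means the model rates that emotion higher than listeners do, a negative value lower.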

The emotion predictions are suspiciously correlated with each other. Power and joy are basically identical (96% correlation).

This confirms a suspicion I had: Gemini can’t actually hear the audio. It can transcribe, but beyond that it’s just guessing.

Human music taggers are safe, for now.

Story, prompts & analysis: https://sanand0.github.io/datastories/llm-music/