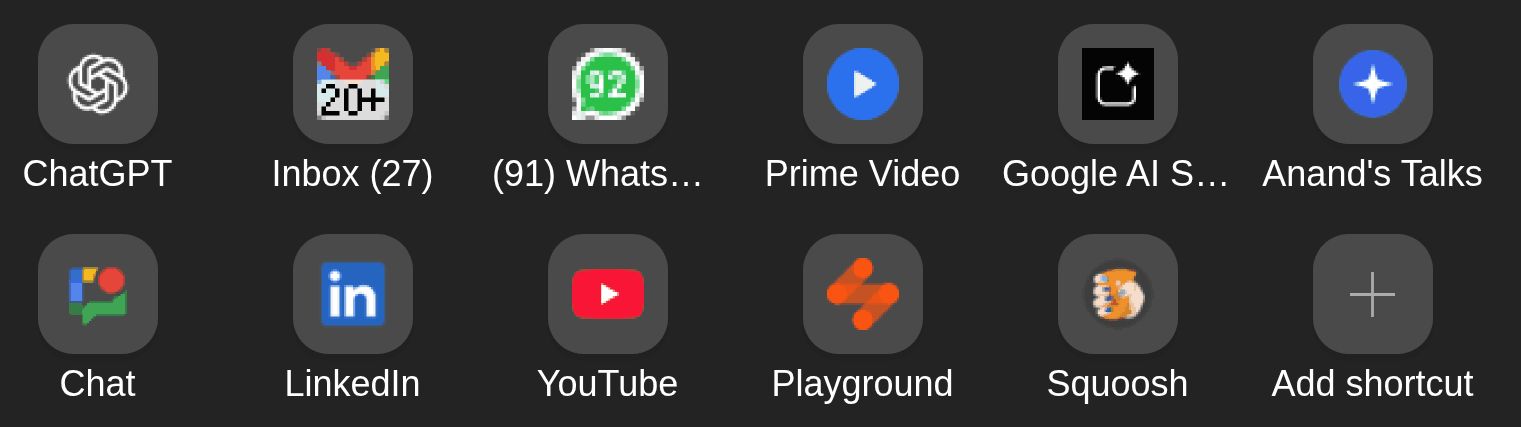

The 11 sites I visit most:

1. ChatGPT. It’s replaced Google as my default knowledge source. I prefer it over Gemini, Claude, etc. because the app has good features (memory from past conversations, code interpreter, strong voice mode, remote MCP on the web app, etc.). The OpenAI models have pros and cons, but the app features are ahead of the competition.
2. Gmail. It’s my work inbox. Interestingly, I check it more (and respond faster) than social channels (e.g. WhatsApp, Google Chat, LinkedIn). It also doubles as my task queue.
3. WhatsApp. It’s my default phone + messaging app. A fair bit of my work communication happens here, too.
4. Prime Video. I mainly watch The Mentalist. Totally love Patrick Jane!
5. Google AI Studio. Mostly for transcription. It’s better than Gemini on UI, ability to handle uploads, file formats, etc. It’s also free (though the data is used for training).
6. My Talks page: https://sanand0.github.io/talks/. I give 1-1.5 talks a week, mostly on AI/ML topics. I use Marp to render Markdown slides and publish them here.
7. Google Chat. It’s Straive’s social channel. I can’t use it from my phone, so I log in only when I need to check whether I missed something.
8. LinkedIn. It’s where I post by default. I don’t use it for networking and only connect with people I’ve met and know well.
9. YouTube. Mostly for movie clips over dinner. I occasionally watch educational content.
10. LLM Foundry: https://llmfoundry.straive.com/. LLM Foundry is Straive’s internal gateway to multiple model APIs (I built it). I use it to experiment with models, grab API keys, and demo LLMs to clients.
11. Squoosh. I compress every image, every time. Mostly into WebP (hands-down the best format today), typically lossless with an 8-color palette, or lossy at ~0-10% quality for photos.

The list will change. But the reasons probably won’t: fast, simple, automatable, and practical (for me).

...