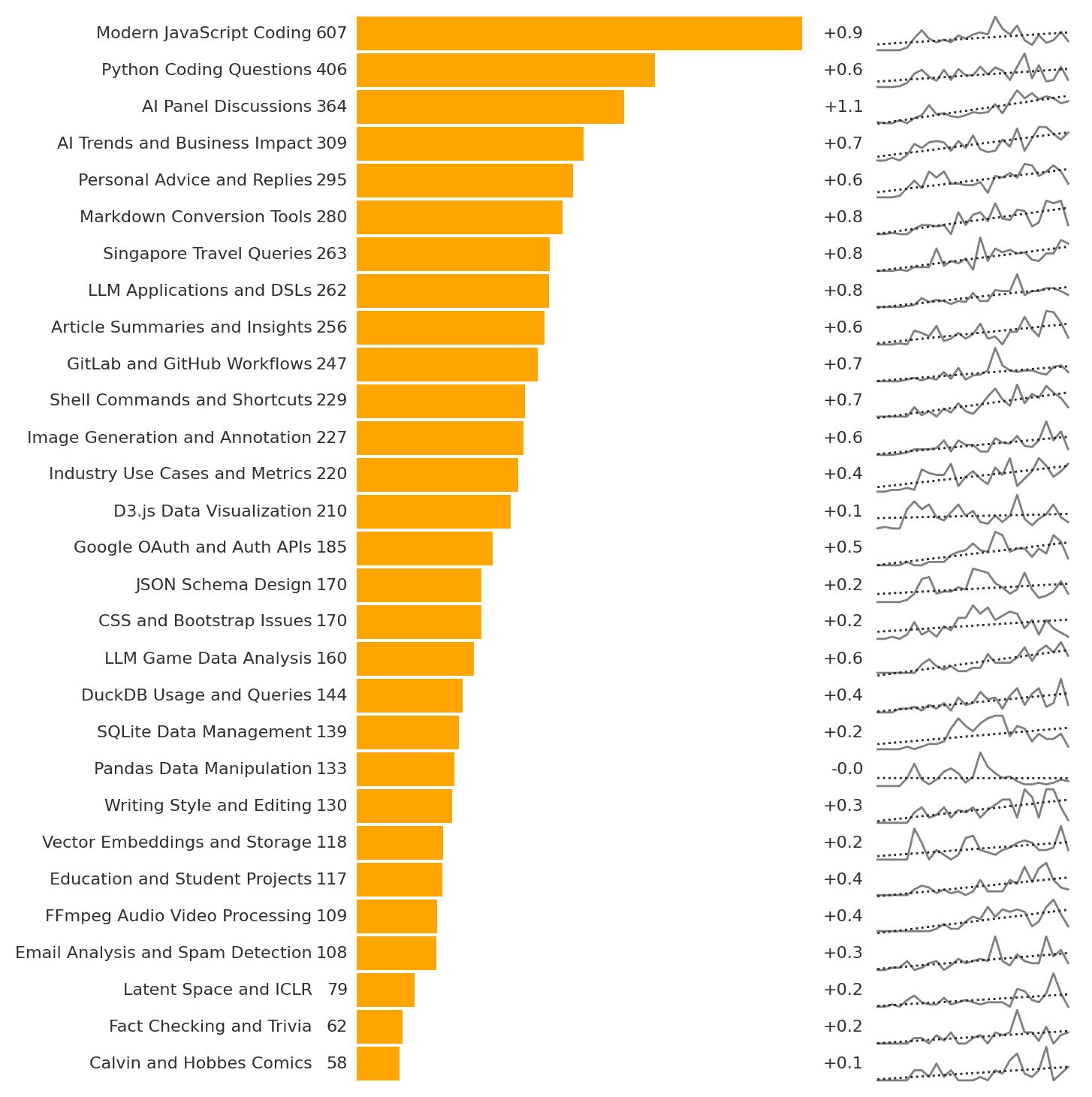

Here’s how I use ChatGPT, based on the ~6,000 conversations I’ve had in 2 years. My top use, by far, is for technology. “Modern JavaScript Coding” and “Python Coding Questions” are ~30% of my queries. There’s a long list with Markdown, GitLab, GitHub, Shell, D3, Auth, JSON, CSS, DuckDB, SQLite, Pandas, FFMPeg, etc. featured prominently. Next is to brainstorm AI use: “AI Panel Discussions”, “AI Trends and Business Impact”, “LLM Applications and DSLs”, “Industry Use Cases and Metrics” are also fast growing categories. I brainstorm talk outlines, refine slide deck narratives, and plan business ideas. ...